In this article, we look at why DataOps and MLOps matter in software and AI development. We walk through a hands-on MVP approach built on DVC (Data Version Control) and CML (Continuous Machine Learning), integrated with Git, to illustrate these concepts.

- Practical Approach: Using DVC and CML, we demonstrate a real-world, minimum viable product (MVP) approach to DataOps and MLOps.

- Integration with Git: Highlighting the seamless integration of these tools with Git, we show how familiar workflows can be enhanced for data and model management.

- Effective Implementation: Our goal is to provide clear guidance on effectively implementing DataOps and MLOps practices.

Common Issues in AI & Data Projects

- "Which Data Version?" Are you constantly losing track of which data version was used to train a model?

- "Is the New Model Any Good?" Stop wondering whether your latest model beats the old one, or what changed between them.

- "Why's Our Repo So Heavy?" Is your GitHub repository bloated with data files?

Understanding DataOps and MLOps

DataOps and MLOps are foundational practices for modern software development, particularly in AI. These approaches are essential for effectively managing the data and machine learning model lifecycles.

- Scalability: Efficiently managing data (DataOps) and machine learning models (MLOps) is critical to building scalable and robust AI systems.

- Performance and Reliability: Implementing these practices ensures consistent system performance and reliability, which is especially vital for startups operating in dynamic and resource-constrained environments.

- Pitfalls to Avoid: Many development teams fail to version data and models correctly, or manage their systems reactively, which leads to poor reproducibility and higher error rates and hinders growth and innovation.

Understanding and integrating DataOps and MLOps into workflows is not just beneficial; it's a strategic necessity.

The MVP Approach

The MVP (Minimum Viable Product) approach in DataOps and MLOps is all about aligning with the core principles of the Agile Manifesto, emphasizing simplicity, effectiveness, and deployment.

- Agile Principles: Emphasize simplicity, effectiveness, and people-first processes, promoting flexibility and responsiveness in project management.

- Reducing Dependency on Complex Systems: Advocate for minimizing reliance on complex SaaS and proprietary systems, thus maintaining control and flexibility in your development.

- Effective Tools: Leverage tools like DVC and CML that integrate with familiar Git workflows; this approach ensures seamless adoption and enhances team collaboration and efficiency.

Adopting an MVP approach means creating more agile, adaptable, and efficient workflows in DataOps and MLOps, allowing for the development of robust and scalable solutions without getting bogged down by unnecessary complexities.

Hands-On

Now, we dive into the practical side: setting up a Python environment and using DVC, CML, and scikit-learn. We'll configure a GitHub repository for version control, then build and evaluate a model with scikit-learn in a Jupyter Notebook.

- Setup: Set up a Python environment and install DVC, CML, and scikit-learn.

- Model Building: Use scikit-learn with a built-in dataset in a Jupyter Notebook for a simple model training and evaluation demonstration.

- Streamlined Process: Configure GitHub and Git to execute and assess your model.

Install Python Environment

We'll use Poetry to manage our Python environment. Poetry is a Python dependency management tool that allows you to create reproducible environments and easily install packages.

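```bash
# Install Poetry
pipx install poetry

# Initialize a Poetry project
poetry init

# Add dependencies (pandas, toml, seaborn, and matplotlib are used later in the notebook)
poetry add dvc scikit-learn pandas toml seaborn matplotlib jupyter
```

CML itself is a Node.js command-line tool rather than a Python package: install it locally with npm install -g @dvcorg/cml, or let the iterative/setup-cml action install it in CI, as the workflow later in this article does.

Loading the Data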

We'll use the Breast Cancer Wisconsin (Diagnostic) dataset from the UCI Machine Learning Repository, which ships with scikit-learn as load_breast_cancer.

Key characteristics:

- Number of Instances: 569.

- Number of Attributes: 30 numeric, predictive attributes, plus the class.

- Attributes: The mean, standard error, and "worst" value of ten measurements: radius, texture, perimeter, area, smoothness, compactness, concavity, concave points, symmetry, and fractal dimension.

- Class Distribution: 212 Malignant, 357 Benign.
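The class counts above also give a quick baseline for the "Is the new model any good?" question: a model that always predicts the majority class scores the majority share. A small sketch of that arithmetic, using only the counts listed above (in scikit-learn's encoding, 0 is malignant and 1 is benign); the helper name is hypothetical:

```python
def majority_baseline(class_counts: dict[str, int]) -> float:
    """Accuracy of a (hypothetical) classifier that always predicts the most frequent class."""
    total = sum(class_counts.values())
    if total == 0:
        raise ValueError("class_counts must contain at least one sample")
    return max(class_counts.values()) / total


# Counts from the dataset description
counts = {"malignant": 212, "benign": 357}
baseline = majority_baseline(counts)
print(f"Majority-class baseline: {baseline:.3f}")  # Majority-class baseline: 0.627
```

On a specific train/test split the majority share differs slightly, so when comparing models, compute the baseline on the same test set you evaluate on.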

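```python
import sklearn.datasets

# Load the dataset as pandas objects (as_frame=True requires pandas)
data = sklearn.datasets.load_breast_cancer(as_frame=True)

# DataFrame.info() prints its summary itself and returns None
data.data.info()
```

Implementing External Settings for Data and Model Adjustments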

We'll use external configuration files, like settings.toml, to dynamically adjust data and model parameters. This approach adds flexibility to our project and underscores the importance of versioning and tracking changes, especially when introducing intentional alterations or "bugs" for demonstration purposes.

Degrading the Data with External Settings

Because the demonstration dataset performs well with a simple model, we'll artificially degrade the data to emphasize the importance of tracking changes and versioning.

- External Configuration: Utilize settings.toml to set parameters like num_features=1, which dictates the number of features to be used from the dataset.

- Data Manipulation: We dynamically alter our data by reading the num_features setting from settings.toml. For instance, reducing the dataset to only one feature:

```python
import toml

# Read the external settings (e.g. num_features = 1)
settings = toml.load("settings.toml")

# Keep only the first num_features columns
data.data = data.data.iloc[:, : settings["num_features"]]

data.data.info()
```

Training the Model

We'll use scikit-learn to split the data and train a simple model.

```python
import sklearn.model_selection

# Split into train (70%) and test (30%) sets; random_state makes the split reproducible
X_train, X_test, y_train, y_test = sklearn.model_selection.train_test_split(
    data.data, data.target, test_size=0.3, random_state=42
)
```

```python
import sklearn.linear_model

# Train a simple logistic regression model
model = sklearn.linear_model.LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)
```

```python
import sklearn.metrics

# Evaluate the model on the held-out test set
predictions = model.predict(X_test)
accuracy = sklearn.metrics.accuracy_score(y_test, predictions)
print(f"Model Accuracy: {accuracy:.2f}")
```

Output:

```
Model Accuracy: 0.91
```
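Two numbers in this step are easy to check by hand. With a float test_size, scikit-learn rounds the test set up, ceil(test_size * n_samples), which is why 569 samples and test_size=0.3 yield 171 test rows (the support total in the classification report that follows). Accuracy is simply the share of predictions that match the true labels. A standard-library sketch of both calculations; the helper names are hypothetical, and it reproduces the split sizes, not scikit-learn's shuffling:

```python
import math


def split_sizes(n_samples: int, test_size: float) -> tuple[int, int]:
    """Return (n_train, n_test) using the rounding rule scikit-learn applies
    to a float test_size: the test set is rounded up, training gets the rest."""
    if not 0.0 < test_size < 1.0:
        raise ValueError("test_size must be between 0 and 1")
    n_test = math.ceil(test_size * n_samples)
    return n_samples - n_test, n_test


def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


print(split_sizes(569, 0.3))                 # (398, 171)
print(accuracy([0, 1, 1, 0], [0, 1, 0, 0]))  # 0.75
```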

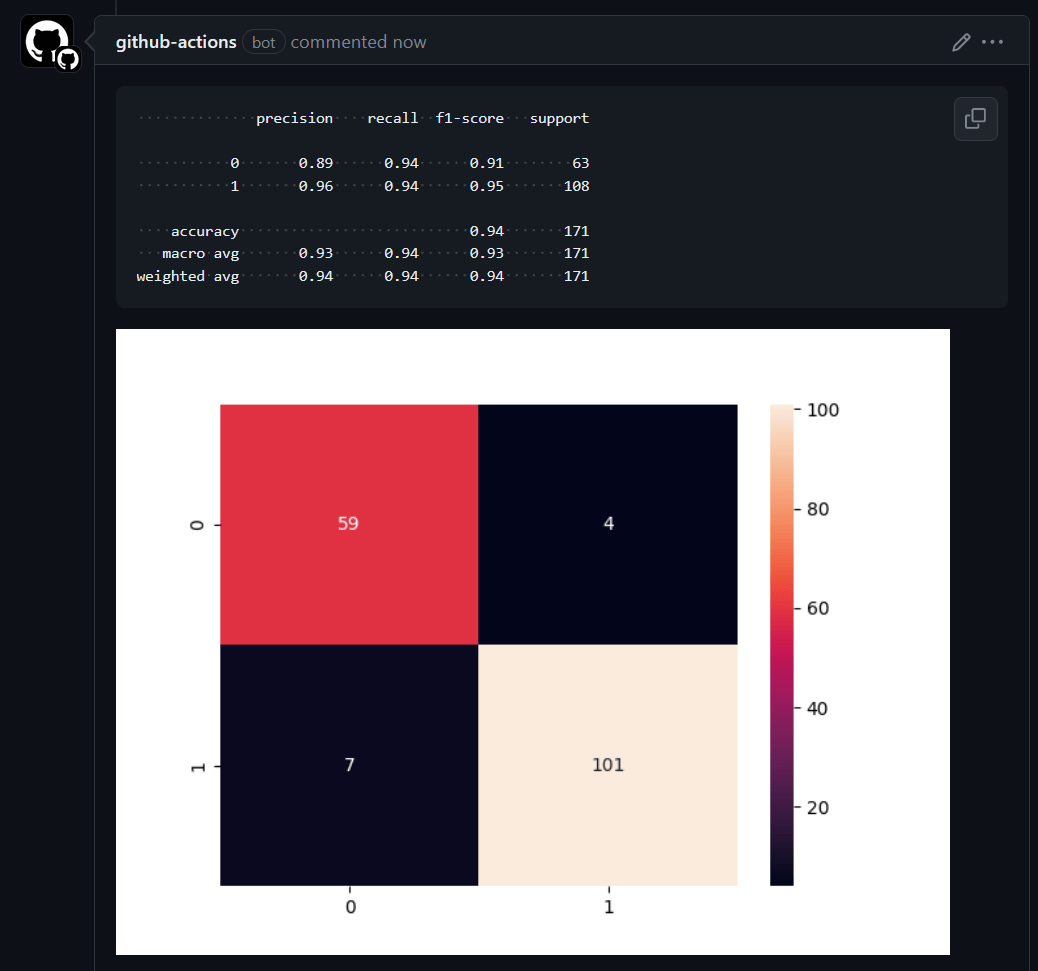
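Accuracy hides how errors are distributed between the classes, which is what the classification report and confusion matrix in the next steps break down. The sketch below builds a confusion matrix in the same orientation scikit-learn uses (rows are true labels, columns are predicted labels) and derives per-class precision, recall, F1, and support from it. The toy labels and helper names are made up for illustration; returning 0.0 when a denominator is zero mirrors scikit-learn's default zero_division behavior, minus its warning.

```python
def build_confusion_matrix(y_true, y_pred, labels=(0, 1)):
    """Count (true, predicted) pairs: rows are true labels, columns are predictions."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def per_class_metrics(matrix):
    """Precision, recall, F1, and support for each class of a confusion matrix."""
    n = len(matrix)
    results = []
    for k in range(n):
        tp = matrix[k][k]
        predicted = sum(matrix[i][k] for i in range(n))  # column total
        support = sum(matrix[k])                          # row total
        precision = tp / predicted if predicted else 0.0
        recall = tp / support if support else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        results.append({"precision": precision, "recall": recall,
                        "f1": f1, "support": support})
    return results


# Toy labels, made up for illustration
y_true = [0, 0, 0, 1, 1, 1, 1, 1]
y_pred = [0, 0, 1, 1, 1, 1, 1, 0]
cm = build_confusion_matrix(y_true, y_pred)
metrics = per_class_metrics(cm)
print(cm)  # [[2, 1], [1, 4]]
for label, m in zip((0, 1), metrics):
    print(label, {name: round(value, 2) for name, value in m.items()})
```

The macro avg row of the report is the plain mean of the per-class values, while weighted avg weights each class by its support.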

```python
# View the classification report
report = sklearn.metrics.classification_report(y_test, predictions)
print(report)

# Export the report to a file (the CML workflow reads it later)
with open("report.txt", "w") as f:
    f.write(report)
```

Output:

```
              precision    recall  f1-score   support

           0       0.93      0.83      0.87        63
           1       0.90      0.96      0.93       108

    accuracy                           0.91       171
   macro avg       0.92      0.89      0.90       171
weighted avg       0.91      0.91      0.91       171
```

```python
import matplotlib.pyplot as plt
import seaborn as sns

# Create a confusion matrix (rows: true labels, columns: predicted labels)
confusion_matrix = sklearn.metrics.confusion_matrix(y_test, predictions)

# Plot the confusion matrix
sns.heatmap(confusion_matrix, annot=True, fmt="d")

# Export the plot to a file
plt.savefig("confusion_matrix.png")
```

Saving the Model and Data

We'll save the model and data locally to demonstrate DVC's tracking capabilities.

```python
from pathlib import Path

# Save data
Path("data").mkdir(exist_ok=True)
data.data.to_csv("data/data.csv", index=False)
data.target.to_csv("data/target.csv", index=False)
```

```python
import joblib

# Save model
Path("model").mkdir(exist_ok=True)
joblib.dump(model, "model/model.joblib")
```

Implementing Data and Model Versioning with DVC

Until now, we have covered the standard parts of AI and machine learning development. Next we turn to data versioning and model tracking, the practices that change how we manage and evolve machine learning projects.

- Better Operations: Data versioning and model tracking are crucial for AI project management.

- Data Versioning: Efficiently manage data changes and maintain historical accuracy for model consistency and reproducibility.

- Model Tracking: Start tracking model iterations, identify improvements, and ensure progressive development.
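To make "identify improvements" concrete, here is a minimal sketch that compares two sets of metrics, for example values read from metric files at two Git revisions, and formats the result as a Markdown table suitable for a CML comment. DVC also ships a built-in dvc metrics diff command for tracked metric files; the function names and metric values below are hypothetical.

```python
def diff_metrics(old: dict[str, float], new: dict[str, float]) -> dict[str, dict]:
    """Pair up metrics from two model versions and compute the change (hypothetical helper)."""
    diff = {}
    for name in sorted(old.keys() | new.keys()):
        before, after = old.get(name), new.get(name)
        change = after - before if before is not None and after is not None else None
        diff[name] = {"old": before, "new": after, "change": change}
    return diff


def to_markdown(diff: dict[str, dict]) -> str:
    """Render the diff as a Markdown table ("-" marks a missing value)."""

    def fmt(value, signed=False):
        if value is None:
            return "-"
        return f"{value:+.2f}" if signed else f"{value:.2f}"

    lines = ["| Metric | Old | New | Change |", "|---|---|---|---|"]
    for name, row in diff.items():
        lines.append(f"| {name} | {fmt(row['old'])} | {fmt(row['new'])} "
                     f"| {fmt(row['change'], signed=True)} |")
    return "\n".join(lines)


# Hypothetical metrics from two model versions
old_metrics = {"accuracy": 0.91, "f1_macro": 0.90}
new_metrics = {"accuracy": 0.95, "f1_macro": 0.94, "recall_malignant": 0.90}
print(to_markdown(diff_metrics(old_metrics, new_metrics)))
```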

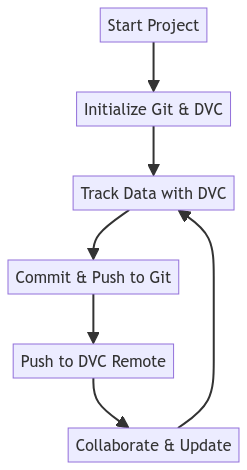

Streamlining Workflow with DVC Commands

To effectively integrate Data Version Control (DVC) into your workflow, we break down the process into distinct steps, ensuring a smooth and understandable approach to data and model versioning.

Initializing DVC

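Start by setting up DVC in your project directory. DVC expects to run inside a Git repository, so run git init first if the project isn't one yet. This initialization lays the groundwork for subsequent data versioning and tracking.

```bash
dvc init
```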

Setting Up Remote Storage

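Configure remote storage for DVC. This storage will host your versioned data and models, ensuring they are safely stored and accessible. Here a local directory stands in for the remote; for a team you would typically point it at shared storage such as S3 or GCS.

```bash
dvc remote add -d myremote /tmp/myremote
```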

Versioning Data with DVC

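Add your project data to DVC. This step versions your data, enabling you to track changes and revert if necessary. DVC writes a small data.dvc pointer file and adds the data directory to .gitignore, so Git never stores the data itself.

```bash
dvc add data
```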

Versioning Models with DVC

Similarly, add your ML models to DVC. This ensures your models are also versioned and changes are tracked.

```bash
dvc add model
```

Committing Changes to Git

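After adding data and models to DVC, commit these changes to Git. This step links your DVC versioning with Git's version control system. Include the .dvc directory and .dvcignore file created by dvc init, since .dvc/config now holds the remote configuration.

```bash
git add .dvc .dvcignore data.dvc model.dvc .gitignore
git commit -m "Add data and model"
```

Pushing to Remote Storage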

Finally, push your versioned data and models to the configured remote storage. This secures your data and makes it accessible for collaboration or backup purposes.

```bash
dvc push
```

Tagging a Version

Create a tag in Git for the current version of your data:

```bash
git tag -a v1.0 -m "Version 1.0 of data"
```

Updating and Versioning Data

- Make Changes to Your Data:
  - Modify data/data.csv as needed.

- Track Changes with DVC:
  - Run dvc add again to record the new version:

```bash
dvc add data
```

- Commit the New Version to Git:
  - Commit the updated DVC file to Git, then push the new data to the remote so others can retrieve it:

```bash
git add data.dvc
git commit -m "Update data to version 2.0"
dvc push
```

- Tag the New Version:
  - Create a new tag for the updated version:

```bash
git tag -a v2.0 -m "Version 2.0 of data"
```

Switching Between Versions

- Checkout a Previous Version:
  - To revert to a previous version of your data, check out the corresponding tag with Git (this leaves Git in a detached HEAD state):

```bash
git checkout v1.0
```

- Revert Data with DVC:
  - After checking out the tag in Git, run dvc checkout so the files in your workspace match that version's .dvc files:

```bash
dvc checkout
```

Understanding Data Tracking with DVC

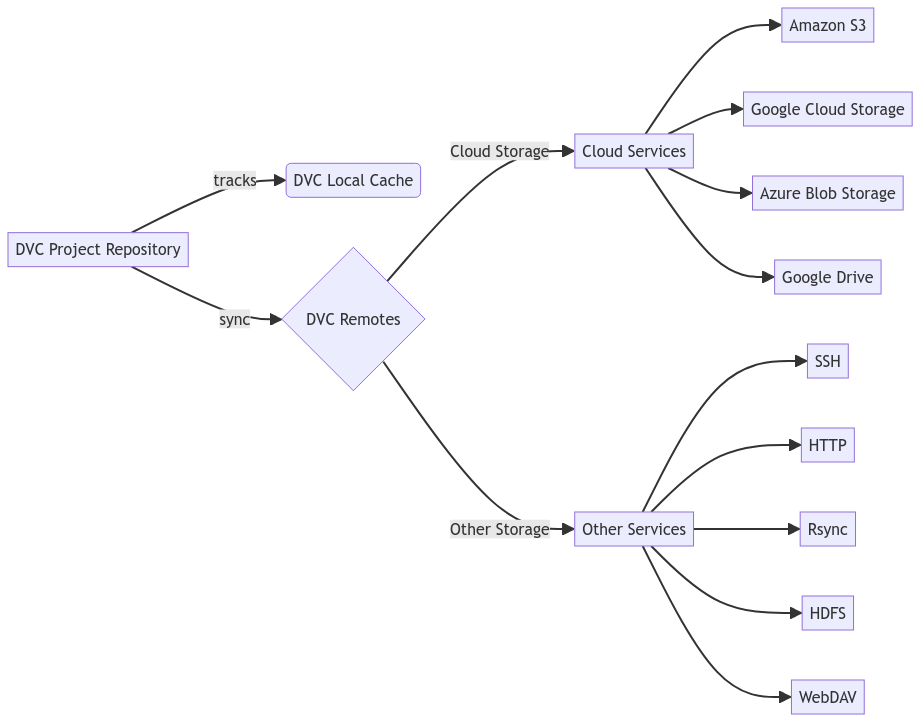

DVC manages data by tracking pointers and hashes rather than the data itself. This matters in the context of Git, a system not designed to handle large files or binary data efficiently.

How DVC Tracks Data

- Storing Pointers in Git:
  - DVC stores small .dvc files in Git. These pointer files reference the actual data files.
  - Each pointer contains metadata about the data, including a hash value that uniquely identifies the data version.

- Hash Values for Data Integrity:
  - DVC computes a hash (MD5 by default) of each tracked file's content. This hash ensures the integrity and consistency of the data version being tracked.
  - Any change in the data produces a new hash, making modifications easy to detect.

- Separating Data from Code:
  - Unlike Git, which stores every version of each file in the repository itself, DVC keeps the actual data outside the repository: in its local cache and in remote storage (such as S3, GCS, or a local file system).
  - This separation of data and code keeps large data files from bloating the Git repository.
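The pointer-and-hash mechanism can be illustrated with a toy content-addressed store. This is a simplified sketch loosely modeled on DVC's cache layout (files named by their MD5 hash and grouped under the first two hex characters), not DVC's implementation; real DVC also handles directories, link types, and remotes, and its .dvc files carry a few more fields. The add and checkout helpers are hypothetical names chosen to echo dvc add and dvc checkout.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def file_md5(path: Path) -> str:
    """Hash file contents in chunks so large files need not fit in memory."""
    digest = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def cache_path(cache: Path, md5: str) -> Path:
    return cache / md5[:2] / md5[2:]


def add(path: Path, cache: Path) -> dict:
    """Hypothetical helper: copy a file into the cache once per unique content, return a pointer."""
    md5 = file_md5(path)
    target = cache_path(cache, md5)
    if not target.exists():
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, target)
    # Roughly the fields an entry in a .dvc file's outs list records
    return {"md5": md5, "size": path.stat().st_size, "path": path.name}


def checkout(pointer: dict, cache: Path, workspace: Path) -> Path:
    """Hypothetical helper: restore the file version a pointer refers to."""
    dest = workspace / pointer["path"]
    shutil.copy2(cache_path(cache, pointer["md5"]), dest)
    return dest


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    cache = root / "cache"
    data_file = root / "data.csv"

    data_file.write_text("a,b\n1,2\n")
    v1 = add(data_file, cache)         # pointer for version 1
    data_file.write_text("a,b\n1,2\n3,4\n")
    v2 = add(data_file, cache)         # new content, new hash

    checkout(v1, cache, root)          # roll the workspace back to version 1
    restored = data_file.read_text()
    cached_objects = sum(1 for p in cache.rglob("*") if p.is_file())

print(v1["md5"] != v2["md5"], restored == "a,b\n1,2\n", cached_objects)  # True True 2
```

Only the small pointer dictionaries would go into Git; the cache (and a remote copy of it) holds the bytes, which is why the repository stays light as the data grows.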

Importance in the Context of Git

- Efficiency with Large Data:
  - Git struggles with large files, leading to slow performance and repository bloat. DVC avoids this by offloading data storage.
  - Developers can use Git as intended, for source code, while DVC manages the data.

- Enhanced Version Control:
  - DVC extends Git's version control capabilities to large data files without taxing Git's infrastructure.
  - Teams can track changes in data with the same granularity and simplicity as they track changes in source code.

- Collaboration and Reproducibility:
  - DVC facilitates collaboration by allowing team members to share data easily and reliably through remote storage.
  - Reproducibility improves because DVC keeps data and code versions aligned, which is crucial in data science and machine learning projects.

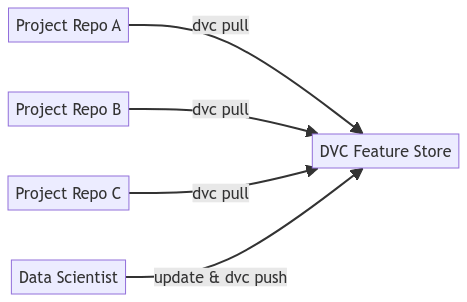

Using DVC as a Feature Store

DVC can serve as a lightweight feature store in machine learning workflows. It offers version control, reproducibility, and collaboration, streamlining the management of features across multiple projects.

What is a Feature Store?

A feature store is a centralized repository for storing and managing features: reusable, model-ready inputs derived from raw data, together with the logic that produces them. The core benefits of a feature store include:

- Consistency: Ensures uniform feature calculation across different models and projects.

- Efficiency: Reduces redundant computation by reusing features.

- Collaboration: Facilitates sharing and discovering features among data science teams.

- Quality and Compliance: Maintains a single source of truth for features, enhancing data quality and aiding in compliance with data regulations.

Benefits of DVC in Feature Management

- Version Control for Features: DVC enables version control for features, allowing tracking of feature evolution.

- Reproducibility: Ensures each model training is traceable to the exact feature set used.

- Collaboration: Facilitates feature-sharing across teams, ensuring consistency and reducing redundancy.

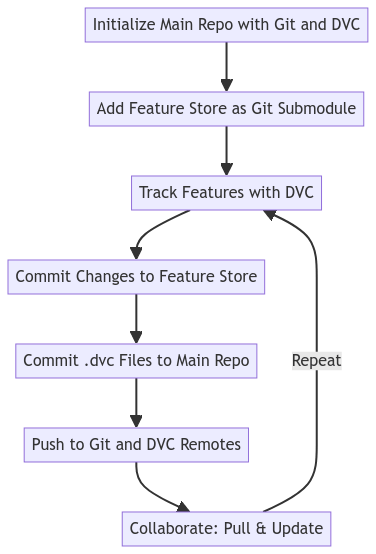

Setting Up DVC as a Feature Store

- Organizing Feature Data: Store feature data in structured directories within your project repository.

- Tracking Features with DVC: Use DVC to add and track feature files (e.g., dvc add data/features.csv).

- Committing Feature Changes: Commit changes to Git alongside .dvc files to maintain feature evolution history.

Using DVC for Feature Updates and Rollbacks

- Updating Features: Track changes by rerunning dvc add on updated features.

- Rollbacks: Check out the Git revision that holds the desired .dvc files, then run dvc checkout to restore that feature version.

Best Practices for Using DVC as a Feature Store

- Regular Updates: Keep the feature store up-to-date with regular commits.

- Documentation: Document each feature set, detailing source, transformation, and usage.

- Integration with CI/CD Pipelines: Automate feature testing and model deployment using CI/CD pipelines integrated with DVC.

Implementing a DVC-Based Feature Store Across Multiple Projects

- Centralized Data Storage: Choose shared storage that is accessible by all projects and configure it as a DVC remote.

- Versioning and Sharing Features: Version control feature datasets in DVC and push them to centralized storage. Share .dvc files across projects.

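- Pulling Features in Different Projects: Clone the feature repository and run dvc pull, or use dvc import (which keeps the imported file tracked and updatable) or dvc get (a one-off download) to bring specific feature files into other projects' workflows.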

Best Practices for Managing a DVC-Based Feature Store Across Projects

- Documentation: Maintain comprehensive documentation for each feature.

- Access Control: Implement mechanisms to regulate access to sensitive features.

- Versioning Strategy: Develop a clear strategy for feature versioning.

- Automate Updates: Utilize CI/CD pipelines for updating and validating features.
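As one example of what automated validation could look like, here is a small standard-library check that a CI job might run before features are pushed: it verifies that required columns exist and that every value parses as a finite number. The function name, column names, and rules are hypothetical, and line numbers assume one record per line; adapt them to your own feature definitions.

```python
import csv
import io
import math


def validate_features(text: str, required_columns: list[str]) -> list[str]:
    """Hypothetical check: return problems in a feature CSV; an empty list means it passed."""
    reader = csv.DictReader(io.StringIO(text))
    header = reader.fieldnames or []
    missing = [col for col in required_columns if col not in header]
    if missing:
        return [f"missing columns: {missing}"]

    problems = []
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        for col in required_columns:
            value = row[col]
            try:
                number = float(value)
            except (TypeError, ValueError):  # None for short rows, "" or text otherwise
                problems.append(f"line {line_no}: {col}={value!r} is not numeric")
                continue
            if not math.isfinite(number):
                problems.append(f"line {line_no}: {col}={value!r} is not finite")
    return problems


columns = ["mean_radius", "mean_texture"]
good = "mean_radius,mean_texture\n14.2,19.1\n"
bad = "mean_radius,mean_texture\n14.2,\nnan,19.1\n"
print(validate_features(good, columns))  # []
for problem in validate_features(bad, columns):
    print(problem)
```

In a pipeline, exit with a non-zero status (for example raise SystemExit(1)) when the list is non-empty so the job fails.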

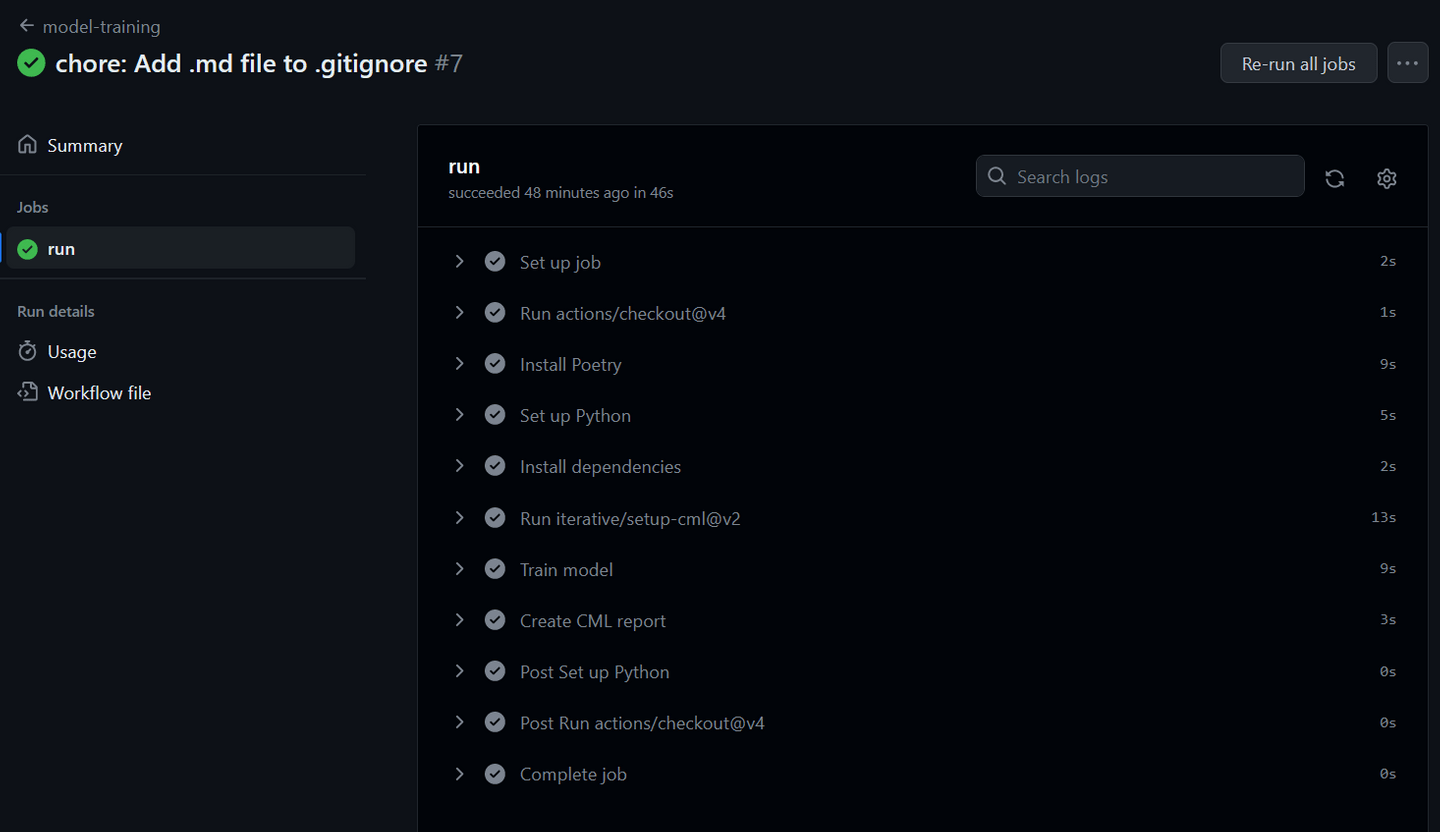

Streamlining ML Workflows with CML Integration

Integrating Continuous Machine Learning (CML) brings CI/CD to machine learning projects: it automates critical steps such as training and reporting, making the workflow more streamlined and efficient.

Setting Up CML Workflows

Create a GitHub Actions workflow in your repository (a YAML file under .github/workflows/). As written, this workflow runs on every push; to run it on pull requests as well, use on: [push, pull_request].

```yaml
name: model-training
on: [push]
jobs:
  run:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Install Poetry
        run: pipx install poetry
      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          python-version: "3.10"
          cache: "poetry"
      - name: Install dependencies
        run: poetry install --no-root
      - uses: iterative/setup-cml@v2
      - name: Train model
        run: |
          make run
      - name: Create CML report
        env:
          REPO_TOKEN: ${{ secrets.GITHUB_TOKEN }}
        run: |
          echo "\`\`\`" > report.md
          cat report.txt >> report.md
          echo "\`\`\`" >> report.md
          echo "" >> report.md
          cml comment create report.md
```

Conclusion: Boosting Software and AI Ops

In wrapping up, we've delved into the core of DataOps and MLOps, demonstrating their vital role in modern software development, especially in AI. By mastering these practices and tools like DVC and CML, you're learning new techniques and boosting your skillset as a software developer.

- Stay Agile and Scalable: Adopting DataOps and MLOps is essential for developing in the fast-paced world of AI and keeping your projects agile and scalable.

- Leverage Powerful Tools: Mastery of DVC and CML enables you to manage data and models efficiently, making you a more competent and versatile developer.

- Continuous Learning and Application: The journey doesn’t end here. The true potential is realized in continuously applying and refining these practices in your projects.

This is more than just process improvement; it's about enhancing your development workflows to meet the evolving demands of AI and software engineering.