Unleashing innovation at our 16-hour AI hackathon

At Osedea, we thrive on pushing the boundaries of innovation, and our latest internal hackathon was a testament to that spirit. Over an intense and exhilarating 16-hour period, our team came together to explore the fascinating world of artificial intelligence, delving into computer vision, natural language processing, diffusion, and machine learning.

The event was not just another hackathon; it was a gathering of brilliant minds fueled by creativity and a passion for technology. Through our partnership with Boston Dynamics, we had two agile robots, Spot and Dot, to experiment with. Additionally, Modal Labs generously sponsored us with $5,000 in serverless compute credits, giving us access to high-end GPUs such as H100s to power through complex AI computations.

The atmosphere was electrifying as developers, alongside our dedicated AI team and even a UI/UX designer, collaborated to bring their innovative ideas to life. Our goal was not only to create groundbreaking projects but also to refine our software development lifecycle by ensuring every developer gained a deep understanding of AI. Five teams, each consisting of 3-4 members, embarked on this journey, each with unique ideas and perspectives. This blog post provides a detailed overview of each team's project, highlighting the creativity, collaboration, and cutting-edge technology that defined our 16-hour AI hackathon. Dive in and discover how we at Osedea are shaping the future of software development and AI.

Team 1: Can I Park Here?

Cedric L'Homme, Christophe Couturier, Daehli Nadeau

Frustrated by the confusing parking signs in Montreal, team 1 decided to tackle the challenge of determining if a car can be parked at a given location based on a picture of a parking sign using modern computer vision techniques.

The initial step involved collecting images of parking signs around the office. These images were captured on foot or by bicycle, with GPS enabled on the phone to record the location of each picture.

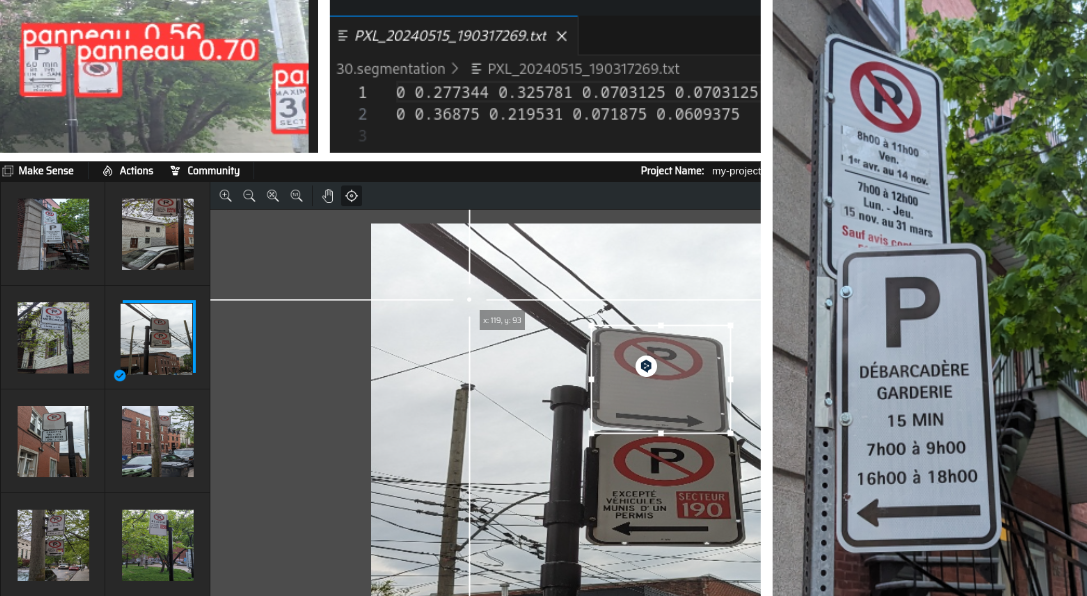

To meet the requirements of the YOLOv5 model, which expects 640 x 640 pixel images, Team 1 wrote a quick Python script to resize the collected images. Once resized, the images were uploaded to Makesense to label the parking signs. The output from Makesense was formatted to be compatible with the YOLOv5 model structure within the Ultralytics application. The resized images and their corresponding coordinates from Makesense were then used to train the YOLOv5 model in the Ultralytics application.
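
For readers unfamiliar with the label format, YOLOv5 expects one text line per labeled object: a class index followed by the box center, width, and height, all normalized by the image dimensions. The sketch below shows that conversion for a pixel-space box. The function name and the sample box are hypothetical, and Makesense can export this format directly, so this is an illustration rather than the team's script.

```python
def to_yolo_line(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    # YOLO stores the box center and size as fractions of the image size.
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# Hypothetical parking sign labeled on a 640 x 640 image, class 0.
print(to_yolo_line(0, (160, 80, 480, 560), 640, 640))
```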

The next step was to evaluate the model's performance on new images that were not part of the training dataset. If the model detected a parking sign, the original high-quality image was used. The bounding box from the model's inference was applied to the original image, and the image was cropped to isolate the parking sign.
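
Mapping a detection back onto the full-resolution photo is mostly a matter of rescaling coordinates. Here is a minimal sketch, assuming the photo was stretched directly to 640 x 640 with no letterbox padding (the post does not say which approach was used). The function name and sample sizes are hypothetical.

```python
def scale_box_to_original(box, model_size, original_size):
    """Rescale a box predicted on the resized image to original-image pixels."""
    model_w, model_h = model_size
    orig_w, orig_h = original_size
    x_min, y_min, x_max, y_max = box
    # Multiply before dividing to limit floating-point drift, then clamp
    # the result to the image bounds.
    left = max(0, round(x_min * orig_w / model_w))
    top = max(0, round(y_min * orig_h / model_h))
    right = min(orig_w, round(x_max * orig_w / model_w))
    bottom = min(orig_h, round(y_max * orig_h / model_h))
    return left, top, right, bottom


# Hypothetical detection on the 640 x 640 input, mapped to a 4032 x 3024 photo.
crop_box = scale_box_to_original((160, 80, 480, 560), (640, 640), (4032, 3024))
print(crop_box)
```

The resulting tuple is in the (left, top, right, bottom) order that most imaging libraries accept for cropping.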

An OCR model then read the text on the sign and extracted relevant information. This extracted text was compared to public data from the city to find the closest matching parking sign. The extracted text was then passed to a Large Language Model (LLM) along with the current date and time to answer the question, "Can we park here now?". Although there were some inconsistencies in the LLM's responses, Team 1 did manage to get some answers, though they pointed out that the solution is far from perfect and would need refinement.
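
OCR output is rarely an exact match for a reference description, so the comparison step needs some form of fuzzy matching. The sketch below uses Python's standard `difflib` as one possible approach; the post does not say which matching method the team used, and the sign descriptions here are invented placeholders rather than real city data.

```python
import difflib


def closest_sign(ocr_text, known_signs, cutoff=0.6):
    """Return the known sign description most similar to the OCR text, or None."""
    # Compare in upper case so capitalization differences do not matter.
    by_upper = {sign.upper(): sign for sign in known_signs}
    matches = difflib.get_close_matches(
        ocr_text.upper(), list(by_upper), n=1, cutoff=cutoff
    )
    return by_upper[matches[0]] if matches else None


# Hypothetical reference descriptions and a noisy OCR reading.
signs = [
    "P 60 min 9h-17h LUN-VEN",
    "Stationnement interdit 8h-9h MAR",
    "Livraison seulement 7h-11h",
]
print(closest_sign("P 6O min 9h-17h LUN-VEM", signs))
```

Returning `None` below the cutoff gives the caller a clear signal that the OCR reading was too noisy to trust.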

Finally, the GPS location was used to fetch open data from the Montreal platform to gather information about other signs in the area. This data was then plotted on a map!

Although this project proved quite difficult because of real-world obstacles such as bent and vandalized signs, it served as an excellent introduction to training models, deploying them, and interpreting data in a real-world scenario. It showcased how AI can make a common task more efficient and informative.

Team 2: AI Travel Companion

Antoine Cadotte, Cimon Tremblay, Maxime Soares, Zack Therrien

Imagine embarking on a journey where your travel companion is an AI that crafts personalized experiences just for you. That's exactly what Hackathon Team 2 envisioned and brought to life with their innovative project, the AI Travel Companion.

The initial concept was to create an AI that not only assisted travelers but also enriched their journeys with unique and interactive features. The AI Travel Companion is equipped with advanced functionalities such as generating music tailored to your trip, vocally answering questions about local history, and even speaking in a local accent to enhance the immersive experience. This thoughtful detail makes interactions feel more authentic and engaging.

Building this companion involved an intricate architecture and multiple pipelines. The frontend was developed using React, integrating with Google Maps for location-based features. The team utilized a combination of models: Vosk for speech recognition, a fine-tuned GPT-2 for Q&A, and a text-to-speech model that could speak with a local accent. They also harnessed the power of MusicGen for creating custom travel tunes and used Google Knowledge Graph for fetching detailed information about nearby monuments.

This project was not without its challenges and, as we say, the journey is as important as the destination! Among others, the team had to navigate interactions between several deployed models and tackled inference latency to ensure the assistant responded promptly. This proved to be a solid case study, reproducing the issues faced by many real-life projects.

Although the team's initial vision was quite ambitious for the timeframe of the Hackathon, the resulting app served as an interesting proof of concept for an audio-first travel assistant, demonstrating how AI can transform generic travel guides into a more personalized and engaging adventure. Whether you want historical tidbits, local music, or just a friendly voice with a local accent, the AI Travel Companion hints at a future where journeys are enriched by technology at every step!

Team 3: Pigeon Fury Kombat (PFK)

Annie Durand, Armand Brière, Julien Lin, Kyle Gehmlich

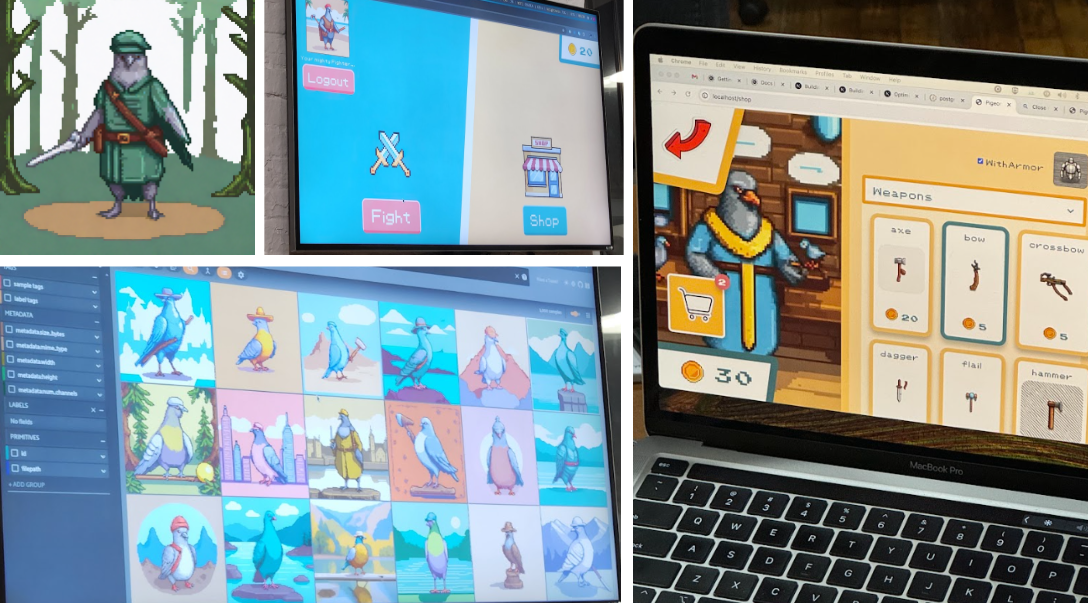

Have you ever dreamed of designing a combat pigeon using AI and pitting it against other pigeons in a Pokemon-style arena? The team behind Pigeon Fury Kombat sure did.

In this innovative game, generative AI was used to create custom pigeon images in a 16-bit video game style based on tags selected by players. The tags allowed for choices of weapons, hats, backdrops, and more. Once created, a player’s pigeon became eligible for combat. Players were paired up in order of arrival (e.g., the first two players to click “Fight” would be pitted against one another), and a fight lasted until either:

a) a pigeon’s health dropped to zero or

b) a player quit the fight by leaving the page.

Winning a fight granted the player gold, while losing a fight meant the permanent loss of the player’s pigeon, requiring the player to use their remaining gold to purchase a new pigeon to continue playing.
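
The first-come, first-served pairing described above maps naturally onto a queue. The following is a minimal Python sketch of that idea, not the team's implementation; the class and method names are hypothetical, and a real server would also need locking and WebSocket handling.

```python
from collections import deque


class Matchmaker:
    """Pairs players in order of arrival."""

    def __init__(self):
        self._waiting = deque()

    def join(self, player_id):
        """Queue a player; return (first, second) once two players are waiting."""
        self._waiting.append(player_id)
        if len(self._waiting) >= 2:
            # popleft() calls run left to right, so arrival order is preserved.
            return self._waiting.popleft(), self._waiting.popleft()
        return None

    def leave(self, player_id):
        """Remove a player who left the page before being paired."""
        if player_id in self._waiting:
            self._waiting.remove(player_id)


lobby = Matchmaker()
print(lobby.join("alice"))  # nobody to fight yet
print(lobby.join("bob"))    # alice and bob are paired
```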

The team was familiar with the technologies used—Go and Python on the back end, React (with TypeScript) on the front end—but building a live, arena-style game based on WebSockets presented plenty of challenges. These included keeping countdown timers in sync, managing player connections and pairings, and ensuring the game state remained consistent.

To create the custom pigeon images, the team utilized a Stable Diffusion XL model fine-tuned to generate pixel art. The goal was to fine-tune the model specifically for pigeon images using thousands of curated pigeon photos. However, they ran into issues during the fine-tuning process and were limited on time. Despite these challenges, the pixel art model provided satisfactory results for the game.

Generative AI is poised to play a huge role in gaming in the near future, with AI agents and generated content becoming increasingly prevalent. Pigeon Fury Kombat gave the team a great opportunity to understand how diffusion models work and demonstrated the potential of combining gamification with generative AI. It's an exciting field, and they aim to explore it further in the future.

Team 4: Montreal CycleSight

Beatrice Lopez, Bradley Campbell, Eric Rideough, Erick Madrigal

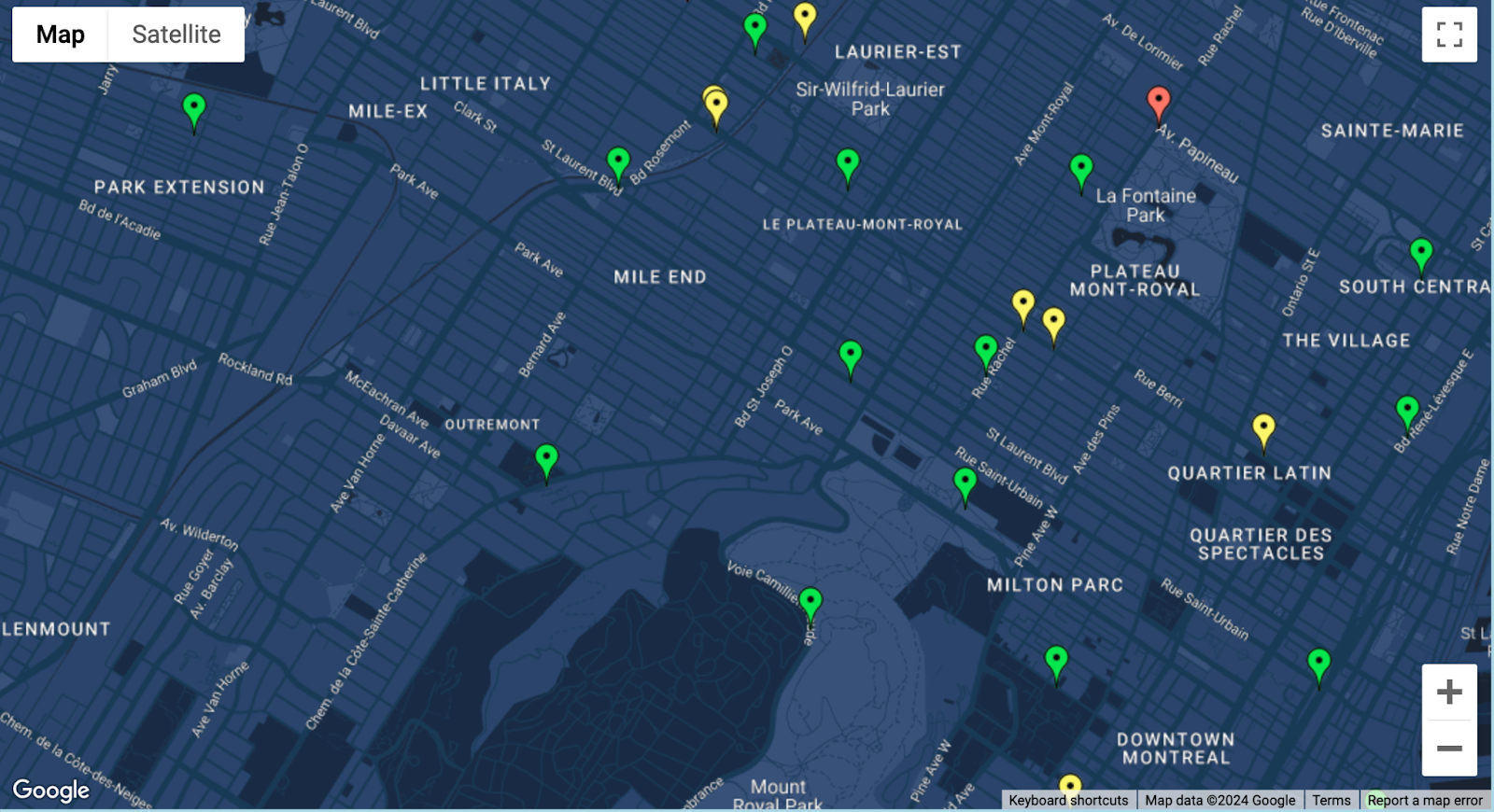

The Montreal CycleSight team set out to develop AI models that predict cycling traffic patterns and trip durations in Montréal. Using time-series bicycle traffic counter data from Montréal Données Ouverts, they aimed to forecast traffic patterns on the cycling network. Additionally, they used trip history data from Bixi to estimate trip durations between two points on the Bixi network.

Preparing the data for training required extensive cleaning and organization to ensure accuracy. The team used Python libraries like pandas and numpy to load and process CSV files. Modal was instrumental in training their models, allowing them to write code locally and leverage remote GPUs to handle the large datasets and train the models efficiently.

For traffic prediction, the team used Prophet, a tool designed to handle seasonal variations, which is crucial for cycling patterns in Montreal. Prophet allowed them to incorporate factors such as the day of the week and holidays, enhancing their predictions. They trained a model for each traffic counter, which could predict future traffic counts based on a given date and time. This data could then be visualized on a map, indicating high, medium, or low traffic areas.
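
Turning per-counter predictions into high, medium, and low labels requires some thresholding rule, which the post does not specify. One simple option, shown here purely as an assumption, is to split the predicted counts into terciles with the standard library. The counter names and counts are made up.

```python
import statistics


def traffic_levels(predicted_counts):
    """Label each counter 'low', 'medium', or 'high' using tercile cut points."""
    # quantiles(..., n=3) returns the two cut points that split the data in thirds.
    low_cut, high_cut = statistics.quantiles(predicted_counts.values(), n=3)
    levels = {}
    for counter, count in predicted_counts.items():
        if count <= low_cut:
            levels[counter] = "low"
        elif count <= high_cut:
            levels[counter] = "medium"
        else:
            levels[counter] = "high"
    return levels


# Hypothetical predicted counts for one hour across six counters.
predictions = {"c1": 10, "c2": 20, "c3": 30, "c4": 40, "c5": 50, "c6": 60}
print(traffic_levels(predictions))
```

Relative cut points like these adapt to the time of day, whereas fixed thresholds would make the labels comparable across hours; either choice is defensible depending on what the map is meant to show.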

To predict Bixi trip durations, they used MLPRegressor (scikit-learn's multi-layer perceptron regressor) and a subset of the 2023 Bixi trip history data. They extracted several features from the data, including the day of the week, time of day, and estimated trip distance.
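
To make the feature extraction concrete, here is a small sketch that builds one feature row from a trip's start time and station coordinates. It assumes the "estimated trip distance" is the straight-line (haversine) distance between stations, which the post does not confirm; the function names and coordinates are hypothetical.

```python
import math
from datetime import datetime

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def trip_features(start_time, start_station, end_station):
    """Build [day_of_week, hour_of_day, distance_km] for one trip."""
    return [
        start_time.weekday(),                       # 0 = Monday ... 6 = Sunday
        start_time.hour + start_time.minute / 60,   # fractional hour of day
        haversine_km(*start_station, *end_station),
    ]


# Hypothetical trip between two points in downtown Montréal.
row = trip_features(datetime(2023, 7, 15, 8, 30),
                    (45.5017, -73.5673), (45.5088, -73.5540))
print(row)
```

Rows like this, stacked into a matrix alongside the observed durations, are the kind of input a regressor such as MLPRegressor is trained on.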

The team initially thought that having over 10 years of data would be advantageous for training their models. However, they quickly realized that a higher volume of data requires a lot of cleanup and more resources for training. For instance, there were over 11 million Bixi trips recorded in 2023 alone. They spent significant time refining this data, focusing on the most relevant trips recorded during the summer months to make their models more manageable and efficient. In the end, they used only about 5000 trips to train their model.

This project highlighted the importance of thorough data preparation and the power of AI in enhancing urban mobility. The team's hackathon journey demonstrated the potential of AI-driven solutions in urban planning, paving the way for smarter and more efficient cities.

Team 5: Spot Draws What You Ask

Carl Lapierre, Cassandre Pochet, Robin Kurtz

For team 5, the drive behind this project was foremost to achieve something cool with our Boston Dynamics Spot robot. They knew they wanted to control it, and they knew they needed to use AI to get to the final result. In the end, they came up with the idea to command Spot to draw an object by itself using diffusion models.

They used various technologies to achieve their solution. First, they used Whisper's base model for simple speech-to-text. For their use case, they had no issues implementing this except for some misunderstandings with Cassandre's French accent—nothing that couldn't be adapted to resolve! Once they had their text, they used OpenAI's GPT-4o to identify the "target" object to draw.

With the object defined, Carl used Stability.ai's SDXL - Text to Image model to create an image. Because they had limited time, they were not able to fine-tune the model to create images that were more easily drawn by Spot. To work around this blocker, they turned to additional tools. Using OpenCV, they processed the generated image in several steps: grayscale conversion, Gaussian blur, Canny edge detection, and dilation of the edges to reduce line thickness. They then converted the result to SVG with other Python packages.

They opted to generate four images to choose from, which gave them the opportunity to use OpenAI's GPT-4o again to identify the "best" image out of the four. They asked the model which choice was the "easiest to draw" and the most similar to the original target object. This gave the user a highlighted result to send over to Spot.

Once the SVGs were created, they needed to convert them to G-code, the most widely used format for computer numerical control (CNC) and 3D printing, for Spot to draw. For this, they used out-of-the-box Python logic. Here they had some issues with the end results simply being too complex for Spot to draw well. They noted that with its limited arm mobility, simpler and shorter (less detailed) G-code drew better. To achieve this, they initially deleted every nth line in the resulting G-code. Later, Carl replaced this logic with an implementation of the Ramer–Douglas–Peucker algorithm, which offered much better results.
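
For context, the Ramer–Douglas–Peucker algorithm simplifies a polyline by keeping only the points that deviate from a straight segment by more than a tolerance. The sketch below is a textbook recursive version operating on (x, y) tuples, not Carl's code; the function names, sample path, and `epsilon` values are illustrative assumptions.

```python
import math


def perpendicular_distance(point, start, end):
    """Distance from point to the line through start and end."""
    (x, y), (x1, y1), (x2, y2) = point, start, end
    dx, dy = x2 - x1, y2 - y1
    if dx == 0 and dy == 0:
        # Degenerate segment: fall back to point-to-point distance.
        return math.hypot(x - x1, y - y1)
    return abs(dy * (x - x1) - dx * (y - y1)) / math.hypot(dx, dy)


def rdp(points, epsilon):
    """Simplify a polyline, dropping points closer than epsilon to the chord."""
    if len(points) < 3:
        return list(points)
    start, end = points[0], points[-1]
    # Find the interior point farthest from the chord start -> end.
    max_dist, index = 0.0, 0
    for i in range(1, len(points) - 1):
        dist = perpendicular_distance(points[i], start, end)
        if dist > max_dist:
            max_dist, index = dist, i
    if max_dist > epsilon:
        # Keep that point and simplify both halves recursively.
        left = rdp(points[:index + 1], epsilon)
        right = rdp(points[index:], epsilon)
        return left[:-1] + right  # drop the duplicated split point
    return [start, end]


path = [(0, 0), (1, 0.1), (2, 0), (3, 3), (4, 0), (5, 0.1), (6, 0)]
print(rdp(path, 0.5))
```

Unlike dropping every nth line, this keeps the sharp corners that define a shape and removes only the points that add little, which is consistent with the better drawings the team reported.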

The final step, handled by Robin, was to send Spot the G-code to draw. Luckily, they were able to start with Boston Dynamics' arm_gcode example. By adding an API layer to be triggered from their front end, and after some tweaks, they could now ask Spot to draw an object from an audio command. This step came with a few difficulties, such as the pressure of the marker on the "canvas." Setting it too high ruined the marker and made for a more jittery drawing, while setting it too low meant the marker wouldn't hit the canvas. Spot's arm, while very practical in industrial settings for manipulating its environment, has limited mobility, so they relied on Spot's ability to walk elegantly in all directions to draw the object with the arm fixed in front.

This was a super fun project. Carl, Cassandre, and Robin were able to achieve their goal and produce a working proof of concept that can be used by anyone to draw whatever you—and our selected generative AI model—can imagine.

The 16-hour AI hackathon at Osedea was more than just an event—it was a celebration of creativity, collaboration, and cutting-edge technology. Each team's project demonstrated the limitless possibilities when talented minds come together, driven by a shared passion for innovation. From deciphering complex parking signs to crafting personalized travel experiences, creating combat-ready pigeons, predicting urban cycling patterns, and commanding a robot to draw anything, the hackathon was a testament to the power of AI in transforming ideas into reality. The projects, though diverse, shared a common thread of pushing boundaries and exploring new frontiers in AI and software development. As we look back on this event, we are inspired by what we have achieved and are excited for the future innovations that lie ahead. At Osedea, we remain committed to fostering an environment where creativity and technology converge, continually driving us to shape the future of software development and AI. If you have an AI product idea, or if you’re interested in learning more about how AI can help solve problems in your industry, we invite you to work with us!
