
The Agent Revolution: 2025 recap & 2026 outlook

Yesterday I found myself looking back at old agentic projects from the prehistoric age of LLMs (2 years ago) and it really struck me how much the ecosystem has evolved. I went from hacking together pizza-ordering agents for drive-throughs with GPT-3.5 to shipping production-grade agents through enterprise ERPs. From speaking at WeAreDevelopers in Berlin to ConFoo here in Montreal and NDC London, I've been fully tapped into the agent zeitgeist.

Everyone hyped 2025 as "the year of the agents," and boy, did it deliver. Looking back at how much the ecosystem changed in just twelve months feels like watching a time-lapse of a city being built. Infrastructure emerged, standards solidified, and what were experimental hacks became production-grade systems almost overnight. So with all that time in the trenches, here's my little recap of what made 2025 so pivotal and what I think 2026 might actually look like.

The Foundations Were Laid Faster Than Anyone Expected

Consider this: the Model Context Protocol (MCP) is barely a year old. Anthropic open-sourced it in November 2024, and by early February 2025, it had already become the standard context protocol for agent infrastructure. That's adoption velocity that would make any technology founder weep with joy. Just this past month, MCP celebrated its first birthday with the launch of MCP 2.0, bringing code execution and multi-tool calling capabilities that fundamentally change how agents orchestrate complex workflows.

In a surprising twist, major tech firms didn't just watch from the sidelines or try to develop their own protocol. They went all in on MCP. Google recently announced fully managed MCP server endpoints for its entire cloud stack, from Maps to BigQuery, making its services "agent-ready by design." Instead of developers writing brittle custom connectors that break every time an API changes, an AI agent can now plug into Google's APIs by pointing to a standardized MCP endpoint. That's the kind of interoperability we want for agents.
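
To make the "standardized endpoint" idea concrete, here is a minimal sketch of the JSON-RPC 2.0 message an MCP client sends to invoke a tool. The `tools/call` method and its `name` and `arguments` parameters follow the MCP specification; the tool name `query_table` and its arguments are hypothetical, and the transport (HTTP or stdio) is deliberately left out.

```python
import json
from itertools import count

# Monotonic request ids so responses can be matched to requests.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool name and arguments, for illustration only.
print(build_tool_call("query_table", {"sql": "SELECT 1"}))
```

The point is that the request shape stays the same whichever server sits behind the endpoint; only the tool name and arguments change.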

And then there was "vibe coding." The term itself isn't even a year old. Andrej Karpathy coined it in February on X to describe that moment when you give up trying to micromanage the coding agent and just vibe with it. Now it's deeply ingrained in business decisions, development workflows, and prototyping sessions. Sure, it's sparked heated debates about technical debt accumulation, but the genie is out of the bottle. We're coding differently now, and there's no going back.

The Creative Explosion

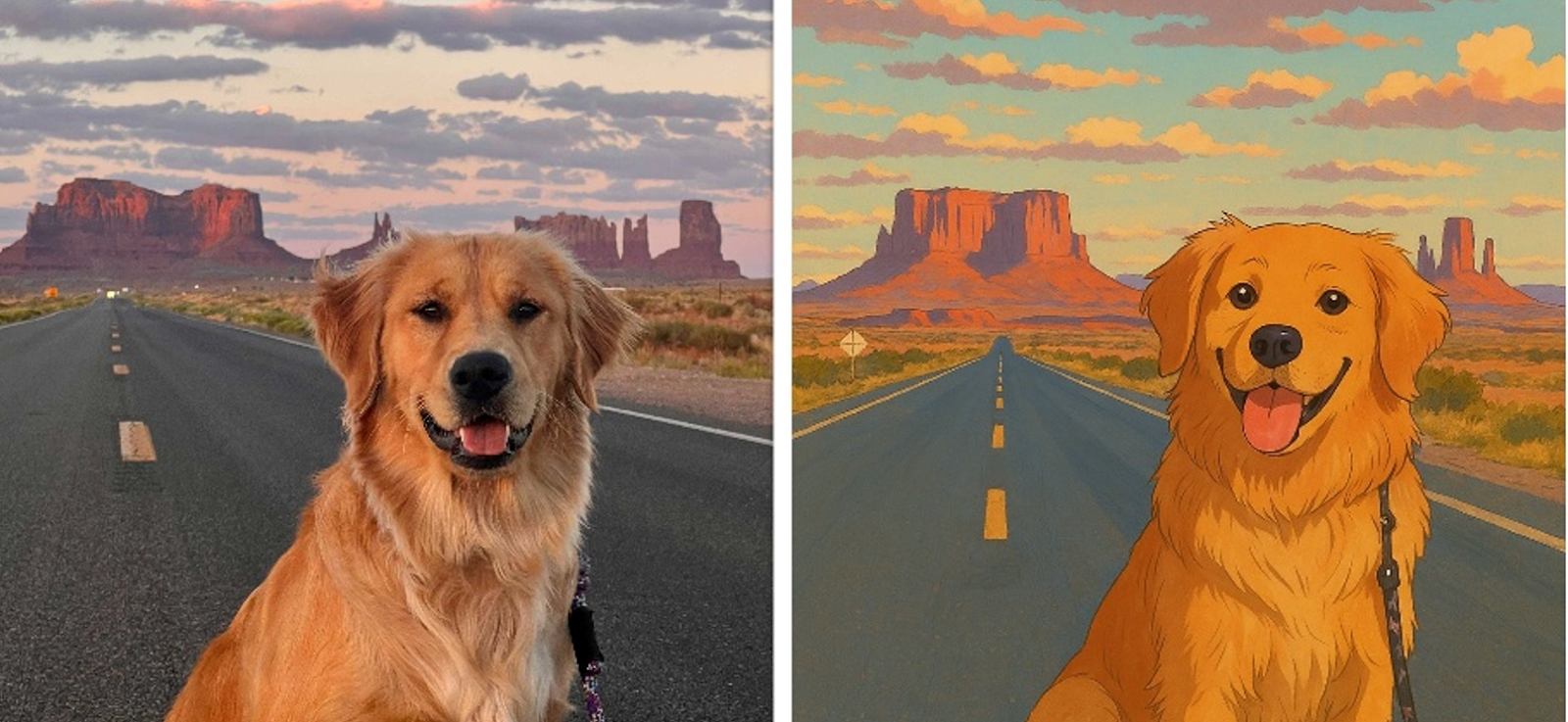

Though not technically on the topic of agents, I felt this was worth noting. Shortly after vibe coding entered our lexicon, we witnessed the "ghiblification" of the internet. OpenAI's groundbreaking image generation model finally cracked perfect text-in-image and style transfer, making every marketer's Pinterest board suddenly achievable. But that was quickly dwarfed by the late summer release of Nano Banana and Nano Banana Pro, which took image editing to Photoshop-like precision without losing fidelity to the source image. As someone who can barely use GIMP properly, watching AI handle complex image edits feels like technological sorcery. I spent TWO DAYS ghiblifying images of my dog.

The video generation space exploded too. We went from Veo 3 to Sora, which added synchronized sound and effects, to Cameos, which bring your face into videos in ways that are both impressive and deeply unsettling. Netflix used AI-generated content in productions this year, Coca-Cola ran its second consecutive Christmas campaign with generated ads, and Disney struck a deal with OpenAI to include Disney characters in Sora. And just when you think the pace might slow down, Meta drops its state-of-the-art SAM 3D model, enabling image-to-3D generation that unlocks entirely new dimensions of creative potential.

The Language of Agent Development Matured

The agent development space matured so quickly that concepts that were foreign jargon at the start of 2025 are now deeply embedded in technical conversations. Prompt engineering and retrieval-augmented generation (RAG) went from research papers to baseline expectations. But more interestingly, we've seen the rise of context engineering, a term that encapsulates most of the agentic system design discipline while adding much-needed structure to the nomenclature.

Context engineering gave us a vocabulary to discuss problems we were all experiencing but couldn't quite articulate. Terms like context compaction, context rot, and context poisoning allow us to better shape ideas and debug agent behaviors. It's akin to programming getting design patterns: suddenly we could all talk about the same problems using the same language.

Anthropic has been at the forefront of this innovation wave with Claude Code, tackling the genuinely hard problem of deep agents capable of reasoning over a codebase for extended periods. They demonstrated concepts like autonomous context compaction, where the context gets intelligently summarized past a certain threshold, enabling those super long multi-turn conversations that would otherwise hit context limits and lose coherence.
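
As a rough illustration of threshold-based compaction, here is a minimal sketch. It is not how Claude Code implements it; the token estimate, the `max_tokens` and `keep_recent` parameters, and the `summarize` callback (a stand-in for a model call) are all assumptions made for the example.

```python
from typing import Callable

Message = dict[str, str]


def estimate_tokens(messages: list[Message]) -> int:
    # Crude stand-in for a real tokenizer: roughly 4 characters per token.
    return sum(len(m["content"]) for m in messages) // 4


def compact(
    messages: list[Message],
    summarize: Callable[[list[Message]], str],
    max_tokens: int = 1000,
    keep_recent: int = 4,
) -> list[Message]:
    """Replace older turns with one summary once the history passes the threshold."""
    if estimate_tokens(messages) <= max_tokens or len(messages) <= keep_recent:
        return messages
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    summary = {
        "role": "system",
        "content": "Summary of earlier turns: " + summarize(older),
    }
    return [summary] + recent


def stub_summarizer(older: list[Message]) -> str:
    # A real system would ask a model to summarize; this only counts messages.
    return f"{len(older)} earlier messages condensed"


history = [{"role": "user", "content": "x" * 1000} for _ in range(10)]
print(len(compact(history, stub_summarizer)))
```

Keeping the most recent turns verbatim while summarizing the rest is one common trade-off: the agent keeps exact wording where it matters most and a lossy digest of everything else.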

Then in the fall, Anthropic introduced another crucial concept: agent harnessing. This refers to the software scaffolding, control loops, and structured environments built around an AI model so it behaves like a reliable autonomous agent. The harness essentially battles the "Memento" effect (that movie where the guy wakes up with no memory but looks at his tattoos for context) that agents experience when starting a new thread, helping them refocus on the overarching goal with proper context alignment. As their engineering team put it in their documentation, harnessing is what transforms a stateless model into a stateful, goal-oriented agent that actually gets things done over time.
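
One way to picture the "tattoos" is a small state file that the harness rereads at the start of every session. The sketch below is an assumption-laden illustration, not Anthropic's harness: the `Harness` class, its state fields, and the file layout are all hypothetical.

```python
import json
import tempfile
from pathlib import Path


class Harness:
    """Persists the goal and progress notes so a fresh session can pick up where the last one stopped."""

    def __init__(self, state_path: Path, goal: str):
        self.state_path = state_path
        if state_path.exists():
            # A previous session left notes behind: trust them over the argument.
            self.state = json.loads(state_path.read_text())
        else:
            self.state = {"goal": goal, "done": [], "next": None}

    def opening_context(self) -> str:
        """Text to place at the top of a new thread so the model regains its bearings."""
        done = "; ".join(self.state["done"]) or "nothing yet"
        next_step = self.state["next"] or "decide"
        return f"Goal: {self.state['goal']}\nCompleted: {done}\nNext step: {next_step}"

    def record(self, finished: str, next_step: str) -> None:
        """Write progress to disk before the session ends."""
        self.state["done"].append(finished)
        self.state["next"] = next_step
        self.state_path.write_text(json.dumps(self.state))


with tempfile.TemporaryDirectory() as workdir:
    path = Path(workdir) / "agent_state.json"
    first_session = Harness(path, goal="migrate the billing module")
    first_session.record("inventoried endpoints", "write regression tests")
    second_session = Harness(path, goal="unused: state already exists")
    print(second_session.opening_context())
```

The model itself stays stateless here; continuity comes entirely from what the harness writes down and replays.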

Open Standards for the Agentic Space

Here's where things get really exciting for 2026 and beyond. In December 2025, Anthropic donated MCP to the newly formed Agentic AI Foundation (AAIF) under the Linux Foundation umbrella. The AAIF launched with contributions from Anthropic, OpenAI, and Block, combining projects like MCP, OpenAI's AGENTS.md spec for coding agents, and Block's "goose" local-first agent framework. This open governance model signals that agent development has graduated from proprietary hacks into a collaborative ecosystem with serious institutional backing.

The heavy hitters are all in: AWS, Google, Microsoft, Anthropic, and OpenAI are all AAIF members. As the Linux Foundation stated, "Agentic AI represents a new era of autonomous decision making that will transform industries," underscoring that open governance is essential to ensure this technology evolves transparently and stably.

According to the Linux Foundation, MCP already has over 10,000 published server endpoints covering everything from developer tools to Fortune 500 databases. It's been adopted in platforms ranging from Microsoft Copilot to OpenAI's ChatGPT to Google's Gemini. In 2026, we're looking at true interoperability: agents built on different frameworks or clouds will speak a common "tool API" language. No walled gardens, no proprietary lock-in. Just standards.

More Complex Multi-Agent Systems

As individual agents become more capable, we're witnessing the rise of multi-agent collaboration and richer long-term memory. A single AI agent handling simple tasks is useful, but complex, multifaceted problems benefit from specialized agents working in concert. Salesforce's research noted that leading companies are "shifting from monolithic AI to an orchestrated workforce model," where a primary orchestrator agent delegates subtasks to smaller expert agents, much like a human manager coordinating a team.
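
The orchestrator pattern can be sketched in a few lines. Everything here is illustrative: the experts are plain functions standing in for model-backed agents, and the plan is hard-coded, whereas a real orchestrator would typically ask a model to produce it.

```python
from typing import Callable

Expert = Callable[[str], str]


class Orchestrator:
    """Routes each subtask to the expert registered for the required skill."""

    def __init__(self, experts: dict[str, Expert]):
        self.experts = experts

    def run(self, plan: list[tuple[str, str]]) -> list[str]:
        results = []
        for skill, subtask in plan:
            expert = self.experts.get(skill)
            if expert is None:
                raise KeyError(f"no expert registered for {skill!r}")
            results.append(expert(subtask))
        return results


# Hypothetical experts; each would wrap its own model, tools, and prompt.
experts = {
    "research": lambda task: f"[research] findings for: {task}",
    "drafting": lambda task: f"[drafting] draft of: {task}",
}

plan = [("research", "Q3 churn drivers"), ("drafting", "summary email")]
for line in Orchestrator(experts).run(plan):
    print(line)
```

Failing loudly on an unknown skill is a deliberate choice in this sketch: silent fallbacks make multi-agent runs much harder to debug.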

In 2026, these multi-agent systems will hit mainstream deployment. A lone chatbot that only answers questions will be seen as what Salesforce calls "a dead-end island of limited value," whereas a network of agents collaborating across departments and even across organizations can tackle end-to-end workflows.

This opens fascinating doors for cross-enterprise collaboration. Traditionally, organizations set up multi-agent systems hierarchically, but now we can have top-level agents representing entire organizations, negotiating and collaborating with other organizational agents. This is where something like Coinbase's x402 protocol becomes relevant. It's an open standard for internet-native payments that could enable agents to offer pay-per-use services, allowing one agent to delegate work to other autonomous agents without needing all capabilities in-house. Imagine an agent hiring another agent's specialized service, paying in microtransactions, all without human intervention.

Less Framework, More Observability

A recently published study by IBM called "Measuring Agents in Production" found that 85% of detailed case studies forgo third-party agent frameworks, opting instead to build custom agent applications from scratch. This aligns with my personal experience in this space as well. As agentic loops evolve and context engineering becomes more important, there's evident value in orchestrating your steps yourself rather than fighting against framework opinions.

Additionally, there's a deep realization emerging: in complex agentic systems, observability is no longer optional. It's foundational. We'll see more emphasis on agent evaluations and observability standards using OpenTelemetry, semantic conventions, and distributed tracing. Anthropic's recent report "How enterprises are building AI agents in 2026" details this growing complexity: "The shift from task automation to process orchestration represents a fundamentally different use case and a different value proposition. Organizations that master multistage and cross-functional agent deployments can unlock advantages in speed, consistency, and scale that simple automation can't."
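
To show what tracing an agent run looks like in practice, here is a standard-library sketch of nested spans. It is not the OpenTelemetry API; it only mimics the shape of the data (span name, parent, attributes, duration) that a real tracer would export. The span names and attribute keys are made up for the example and are not official semantic conventions.

```python
import time
import uuid
from contextlib import contextmanager

finished_spans: list[dict] = []
_active: list[dict] = []  # stack of spans currently open


@contextmanager
def span(name: str, **attributes):
    """Record one unit of agent work, linked to whichever span is currently open."""
    record = {
        "id": uuid.uuid4().hex[:8],
        "parent": _active[-1]["id"] if _active else None,
        "name": name,
        "attributes": attributes,
    }
    _active.append(record)
    start = time.perf_counter()
    try:
        yield record
    finally:
        record["duration_s"] = time.perf_counter() - start
        _active.pop()
        finished_spans.append(record)


# One agent run containing a tool call and a model call (all names hypothetical).
with span("agent.run", goal="close the books"):
    with span("tool.call", tool="ledger_lookup"):
        pass
    with span("llm.call", model="example-model"):
        pass

for s in finished_spans:
    print(s["name"], "parent:", s["parent"])
```

The parent links are what let you reconstruct which model call or tool call belonged to which agent step when something goes wrong.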

Currently, according to the IBM report, 74% of production agents rely primarily on human evaluation, with more than half also using "LLM as judge" approaches but always with human verification. Only a minority build formal benchmarks; many teams rely on A/B tests, expert feedback, or custom tests. There's enormous room to improve agent evaluation methodologies, and I expect 2026 will be the year we crack this problem.

Security, Governance, and the "Agent as Employee" Mindset

Microsoft's security leadership argues that "every agent should have similar security protections as humans" in an enterprise context. This means deployed AI agents will each have a digital identity and access control limits, just like a human employee. Companies in 2026 will treat agents as first-class entities in identity management systems with unique credentials, audit logs of their actions, and permission boundaries. An agent might be granted permission to view certain data but not modify it, or to execute transactions up to a limit.
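
Here is a minimal sketch of what "view but not modify" and "transactions up to a limit" could look like in code. The `AgentIdentity` class, the action names, and the limit are hypothetical; a real deployment would delegate this to the organization's identity and access management system.

```python
from dataclasses import dataclass, field


class PermissionDenied(Exception):
    pass


@dataclass
class AgentIdentity:
    agent_id: str
    allowed_actions: frozenset[str]
    transaction_limit: float = 0.0
    audit_log: list[str] = field(default_factory=list)

    def authorize(self, action: str, amount: float = 0.0) -> None:
        """Allow the action only if it is permitted and within the spending limit."""
        allowed = action in self.allowed_actions and amount <= self.transaction_limit
        verdict = "allow" if allowed else "deny"
        # Every decision is logged, including denials.
        self.audit_log.append(f"{self.agent_id} {action} amount={amount} -> {verdict}")
        if not allowed:
            raise PermissionDenied(f"{self.agent_id} may not {action} (amount={amount})")


agent = AgentIdentity(
    agent_id="ap-agent-01",
    allowed_actions=frozenset({"view_invoice", "pay_invoice"}),
    transaction_limit=500.0,
)
agent.authorize("view_invoice")
agent.authorize("pay_invoice", amount=200.0)
try:
    agent.authorize("modify_invoice")
except PermissionDenied as err:
    print(err)
```

Logging before raising means the audit trail captures attempted violations too, which is what the alerting described next depends on.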

Organizations are implementing monitoring and guardrails where every action an agent takes can be traced and requires justification. Abnormal behavior like attempting unauthorized access will trigger alerts or automatic shutdown. We'll see widespread use of sandboxing and policy enforcement layers, where agents can only perform whitelisted operations and sensitive data they produce gets automatically classified or redacted.

One concrete trend emerging is instruction adherence as a key metric for autonomous agents. Rather than just evaluating whether an agent produces correct output, companies will track how well agents follow directives and constraints. Experts predict the industry will adopt probabilistic adherence scores, essentially a rating of how likely an agent is to comply with guidelines, as a standard for AI governance. This echoes the shift from experimental to production mindset: reliability and predictability are paramount if agents are to be trusted with autonomous decisions.
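
The prediction doesn't say how such a score would be computed, so treat the following as one possible approach rather than an emerging standard: run the agent many times against the same constraints, count the compliant runs, and report both the observed rate and a conservative lower bound (a Wilson score interval, which behaves sensibly with small samples). The function name and sample numbers are made up.

```python
import math


def adherence_score(compliant_runs: int, total_runs: int, z: float = 1.96) -> tuple[float, float]:
    """Return (observed compliance rate, Wilson lower bound at the given z)."""
    if total_runs <= 0:
        raise ValueError("total_runs must be positive")
    if not 0 <= compliant_runs <= total_runs:
        raise ValueError("compliant_runs must be between 0 and total_runs")
    p = compliant_runs / total_runs
    denom = 1 + z**2 / total_runs
    center = (p + z**2 / (2 * total_runs)) / denom
    margin = z * math.sqrt(p * (1 - p) / total_runs + z**2 / (4 * total_runs**2)) / denom
    return p, max(0.0, center - margin)


# Hypothetical evaluation: 188 of 200 runs respected every constraint.
rate, lower = adherence_score(compliant_runs=188, total_runs=200)
print(f"observed {rate:.1%}, lower bound {lower:.1%}")
```

Reporting the lower bound alongside the raw rate keeps a handful of lucky runs from looking like proven reliability.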

The Rise of Specialized Small Language Models

Fine-tuned small language models (SLMs) will become more prevalent in enterprise agent deployments in 2026. As use cases flesh out and move to production, especially for on-premises compute and compliance requirements, effective harnessing and context engineering will place greater importance on inference speed, token optimization, and cost reduction.

With use cases gaining production usage and observability systems in place, golden datasets can be collected and used for downstream fine-tuning, increasing accuracy and reducing costs. The large language and reasoning models will often handle the master control of an agentic workflow, but purpose-built SLMs will deliver the required accuracy and efficiency when trained for their dedicated job within the workflow. Across the board, businesses will understand the importance of their own data in driving AI value, and fine-tuned SLMs are key to unlocking that value in mature agentic solutions.

From Experiments to Operations

If 2025 was about experimenting with AI-powered assistants, 2026 will be about operationalizing AI agents as an everyday part of business. Done right, these agents can relieve employees of drudgery, unlock new levels of productivity, and open up creative ways of working that weren't possible before. A finance team might close the books in seconds with an army of AI helpers, or a customer service department might offer genuine 24/7 instant resolution through a network of smart agents that actually understand context and can take action.

The infrastructure is in place. The standards are being adopted. The security frameworks are maturing. The tools are getting better every month. We've moved from "can we build agents?" to "how do we deploy them responsibly at scale?" That's a fundamentally different question, and it's the right question to be asking.

So as we move through 2026, keep your eyes on cross-enterprise agent collaboration, the maturation of observability standards, and the rise of fine-tuned SLMs. If your organization is looking to move beyond experimentation and into production-grade agent deployments, we'd love to help. Whether you're exploring how agents might fit into your workflows or ready to integrate them into your systems, we can help you navigate the complexity, avoid the pitfalls, and build something that actually works. Interested in chatting about what agentic AI could unlock for your organization? Reach out and let's figure it out together.

